Let’s put our radical hats on and envision a complete repurposing of our current software and network infrastructure.

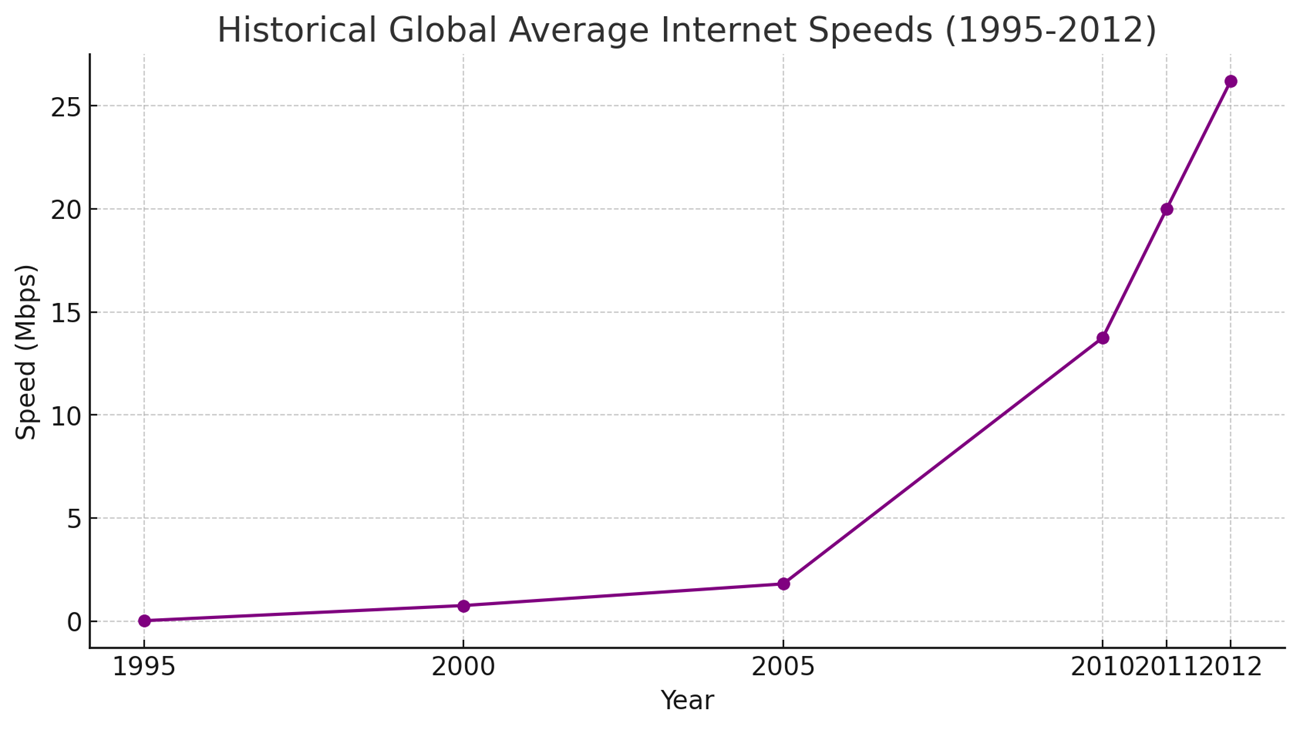

It’s only been about 50 years since the majority of households got a phone in the US. The copper wire that delivered a phone call is what we now call a “landline,” but back then it was just called a “line.” (I don’t think people really focused on the difference until the movie The Matrix came out in 1999, because in that movie landlines had special powers over cellular lines: they enabled a human to travel in and out of the Matrix. But I digress…) Our landlines served their purpose, but we all remember how internet usage exploded when we repurposed those same lines with modems, finding a new framework for an existing network that nobody had imagined when it was first built. Since then we have improved, improved, and improved some more. Soon, it seems, everyone on the globe will have fast internet with fairly low network latency. Will our application lag problem evaporate into the wind when the global network infrastructure catches up, much as our local multitasking lag dissipated when computers got faster and software got rewritten?

“But it works on my local machine…”

To understand the impact of network lag, we developers might first evaluate how our applications perform on our local machines. By this I mean developing an application in a sandbox on a single computer, using local resources for everything: server, database, assets. Most of the time, developers keep the networking layer intact in the local environment, leaving the application’s normal network trips as-is. On a local computer, that network trip is negligibly short (it never leaves the machine) and the latency is tiny too. So it would seem that today’s local machine gives us a glimpse of performance on the networks of the far future, where we project that speed and latency will be virtually conquered. Do our applications scream with performance in this environment?

Usually the answer is yes, but it’s more complicated than that. Sometimes applications in our local environment, with exclusive use of resources, still suffer from lag and immediacy issues. The network isn’t our only problem. Sometimes our applications drag because of software design issues. Slow database queries and contention for resources, especially under heavy load, can be nearly as damaging to a brisk user experience as waiting on the network, and these problems are often just as unpredictable as network conditions. We are now realizing that a network trip is expensive not only because of the network itself, but because of the server load and contention it causes. And sadly, we can’t rely on network performance increases to solve our problem. Even a fast, futuristic network will lag at times: because the internet has been distributed since its inception, it was designed from the ground up to favor robustness over performance.

So what can we do?

We don’t want to spend our days optimizing, testing, and hoping. We want to get on with the creative process and create “as fast as we can think.” Might there be sweeping software solutions that simply end these problems, or at least contain them to the point of inconsequence? We might even consider quite radical solutions, ones that force changes in the way we work and think about software development, in exchange for that holy grail: consistent, reliable immediacy.

Well, what if database queries could be hyper-compartmentalized, to the point where they seldom block the main thread of experience? And what if they could be limited to only when absolutely necessary, bundled together (in advance when possible) and sent as one package, in one efficient trip? And those contended resources: could they be generated and delivered in the same singular, compartmentalized way, then cached on the client side so they never make the trip again? Could the entire app initially be delivered as a single payload alongside its other resources? Downloaded apps on our phones achieve some of these things today, but only partially, and not in an organized way. They also over-rely on the prowess of the developer and lend themselves to those same expensive optimize, test, and hope cycles.
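The bundling idea above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a framework: `QueryBatcher`, `fake_server`, and the query strings are hypothetical names, and the "transport" is a plain Python function standing in for one network round trip. Queries are queued locally and only sent, all together, when `flush()` is called, so three queries cost one trip instead of three.

```python
from typing import Callable, List

# Hypothetical transport: takes a batch of queries and returns one result per
# query. It stands in for a single network round trip to a server.
Transport = Callable[[List[str]], List[str]]


class QueryBatcher:
    """Collects queries and sends them together in one round trip (sketch)."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport
        self._pending: List[str] = []
        self.trips = 0  # number of round trips actually made

    def enqueue(self, query: str) -> int:
        """Queue a query and return its position in the upcoming batch."""
        self._pending.append(query)
        return len(self._pending) - 1

    def flush(self) -> List[str]:
        """Send every pending query as one package; return results in order."""
        if not self._pending:
            return []  # nothing queued: no trip at all
        batch, self._pending = self._pending, []
        self.trips += 1
        results = self._transport(batch)
        if len(results) != len(batch):
            raise ValueError("transport must return one result per query")
        return results


def fake_server(batch: List[str]) -> List[str]:
    # Simulated server: answers each query by upper-casing it.
    return [q.upper() for q in batch]


batcher = QueryBatcher(fake_server)
for q in ["user:42", "cart:42", "prefs:42"]:
    batcher.enqueue(q)
print(batcher.flush())  # ['USER:42', 'CART:42', 'PREFS:42']
print(batcher.trips)    # 1
```

When to call `flush()` is the key design decision; here it is explicit, whereas a framework might flush once per render or event-loop tick so that application code never has to think about it.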

Nothing is faster than not making a network trip at all

We must completely rethink how we use the “copper wires” of today’s software infrastructure. The most effective way to combat lag, it appears, is to eliminate the network trip entirely, mirroring the brisk “1-tier” experience as closely as possible. When network trips are unavoidable, they must be rigorously managed, controlled, and confined at the framework level, with predictability and speed as the primary focus. Since the problem is not just the network but also server resource and data contention, we arrive at a fundamental principle: Nothing is faster than not making a network trip at all. Even in the future.
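A client-side, cache-first loader is one simple way to act on this principle. The sketch below assumes a hypothetical `fetch` function that performs one network trip per call; `CacheFirstLoader`, `preload`, and `fake_fetch` are illustrative names, not an existing library. Resources shipped with the app are seeded into the cache up front, and anything fetched once is kept so it never travels again.

```python
from typing import Callable, Dict

# Hypothetical signature for a function that performs one network trip per call.
Fetch = Callable[[str], bytes]


class CacheFirstLoader:
    """Cache-first resource loader (illustrative sketch, not a real library)."""

    def __init__(self, fetch: Fetch) -> None:
        self._fetch = fetch
        self._cache: Dict[str, bytes] = {}
        self.trips = 0  # counts calls that actually went to the network

    def preload(self, bundle: Dict[str, bytes]) -> None:
        """Seed the cache with resources delivered alongside the app payload."""
        self._cache.update(bundle)

    def get(self, key: str) -> bytes:
        """Return a resource, fetching it over the network only on a cache miss."""
        if key in self._cache:
            return self._cache[key]  # cache hit: no network trip at all
        self.trips += 1
        data = self._fetch(key)
        self._cache[key] = data  # keep it so it never makes the trip again
        return data


def fake_fetch(key: str) -> bytes:
    # Simulated remote server response.
    return f"remote:{key}".encode()


loader = CacheFirstLoader(fake_fetch)
loader.preload({"logo.png": b"<bundled bytes>"})
loader.get("logo.png")     # served from the preloaded bundle
loader.get("styles.css")   # miss: one network trip
loader.get("styles.css")   # hit: served from the cache
print(loader.trips)        # 1
```

The sketch deliberately omits cache invalidation and versioning; a real framework would need a policy for deciding when cached data has gone stale.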